Azure Face Detection API Integration

Microsoft Azure is a cloud computing service that has its own machine learning service, known as Cognitive Services. Cognitive Services is split into five categories: Vision, Speech, Language, Knowledge, and Search. Each category contains several tools; examples include Computer Vision, Content Moderator, Custom Vision Service, Emotion API, Face API, and Video Indexer. Face API has two main functions:

Face Detection

Detects human faces in an image and returns face rectangles, optionally with face IDs, landmarks, and attributes.

- Optional parameters include face IDs, landmarks, and attributes. Attributes include age, gender, head pose, smile, facial hair, glasses, emotion, hair, makeup, occlusion, accessories, blur, exposure, and noise.

- The face ID is used in Face – Identify, Face – Verify, and Face – Find Similar. It expires 24 hours after the detection call.

- Higher face image quality means better detection and recognition precision. Prefer high-quality faces: frontal, clear, and with a face size of 200×200 pixels (100 pixels between the eyes) or bigger.

- JPEG, PNG, GIF (the first frame), and BMP formats are supported. The allowed image file size is from 1KB to 6MB.

- A face is detectable when its size is between 36×36 and 4096×4096 pixels. If you need to detect very small but clear faces, try enlarging the input image.

- Up to 64 faces can be returned for an image. Faces are ranked by face rectangle size from large to small.

- The face detector prefers frontal and near-frontal faces. In some cases faces may not be detected, e.g. with exceptionally large face angles (head pose), occlusion, or wrong image orientation.

- Attributes (age, gender, headPose, smile, facialHair, glasses, emotion, hair, makeup, occlusion, accessories, blur, exposure, and noise) may not be perfectly accurate. HeadPose’s pitch value is a reserved field and will always return 0.
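To make the optional parameters and the file size limit above concrete, here is a minimal Python sketch. It only builds the detection URL and checks the payload size; it does not send anything. The `/face/v1.0/detect` path and the `returnFaceId`, `returnFaceLandmarks`, and `returnFaceAttributes` query names follow the Face API REST reference as I understand it, while the function names and the example endpoint are my own assumptions.

```python
from urllib.parse import urlencode

MIN_IMAGE_BYTES = 1024             # 1 KB lower bound quoted above
MAX_IMAGE_BYTES = 6 * 1024 * 1024  # 6 MB upper bound quoted above


def build_detect_url(endpoint, return_face_id=True, return_landmarks=False,
                     attributes=()):
    """Return the detection URL with the optional parameters encoded.

    `endpoint` is the service endpoint of your own Face API account;
    the helper name and signature are hypothetical.
    """
    params = {
        "returnFaceId": str(return_face_id).lower(),
        "returnFaceLandmarks": str(return_landmarks).lower(),
    }
    if attributes:
        # Attributes are passed as one comma-separated value.
        params["returnFaceAttributes"] = ",".join(attributes)
    return endpoint.rstrip("/") + "/face/v1.0/detect?" + urlencode(params, safe=",")


def check_image_size(image_bytes):
    """Raise ValueError when the payload is outside the 1 KB to 6 MB range."""
    size = len(image_bytes)
    if not MIN_IMAGE_BYTES <= size <= MAX_IMAGE_BYTES:
        raise ValueError(f"image is {size} bytes; expected 1 KB to 6 MB")
    return size


print(build_detect_url("https://example.cognitiveservices.azure.com",
                       attributes=("age", "smile")))
```

Checking the size locally before uploading saves a round trip for images the service would reject anyway.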

Face Recognition

Takes faces and performs comparisons to determine how well they match. It covers the following categories:

- Face Verification – takes two detected faces and attempts to verify that they match

- Finding Similar Face – takes candidate faces in a set and orders their similarity to a detected face from most similar to least similar

- Face Grouping – takes a set of unknown faces and divides them into subsets based on similarity. All faces within one subset are considered to belong to the same person object (based on a threshold value).

- Face Identification – identifies people based on a detected face and a people database (defined as a LargePersonGroup/PersonGroup). Create this database in advance; it can be edited over time.

- Face Storage – allows a Standard subscription to store additional persisted faces when using LargePersonGroup/PersonGroup Person objects (PersonGroup Person – Add Face/LargePersonGroup Person – Add Face) or LargeFaceLists/FaceLists (FaceList – Add Face/LargeFaceList – Add Face) for identification or similarity matching with the Face API.
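As a small illustration of the "most similar to least similar" ordering described for Finding Similar Face, the sketch below sorts a list of match results by confidence. The result shape (a list of dictionaries with `persistedFaceId` and `confidence`) is an assumption about the response format, and `rank_similar` is a hypothetical helper, not part of any SDK.

```python
def rank_similar(matches, limit=None):
    """Order candidate faces from most similar to least similar.

    `matches` is assumed to be a list of dicts, each with a
    "confidence" value between 0 and 1.
    """
    ranked = sorted(matches, key=lambda match: match["confidence"], reverse=True)
    return ranked if limit is None else ranked[:limit]


# Made-up sample data for illustration only.
sample = [
    {"persistedFaceId": "a", "confidence": 0.41},
    {"persistedFaceId": "b", "confidence": 0.93},
    {"persistedFaceId": "c", "confidence": 0.72},
]
for match in rank_similar(sample, limit=2):
    print(match["persistedFaceId"], match["confidence"])
```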

Face Identification

With Face Identification, you must first create a PersonGroup object. That PersonGroup object contains one or more person objects. Each person object contains one or more images that represent the respective person. As the number of face images a person object contains increases, so does the identification accuracy.

For example, let’s say that you create a PersonGroup object called “co-workers.” In co-workers, you create person objects, say two of them: “Alice” and “Bob.” Face images are assigned to their respective person objects. You have now created a database against which a detected face image can be compared. An attempt will be made to find out whether the detected face is Alice or Bob (or neither), based on a numerical threshold.
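The containment described above (a group holds persons, a person holds face images) can be pictured with a tiny in-memory model. This is only an illustration of the structure, not how the service stores anything; the class names mirror the terms in the text, and the image file names are made up.

```python
from dataclasses import dataclass, field


@dataclass
class Person:
    name: str
    face_images: list = field(default_factory=list)  # image paths or URLs


@dataclass
class PersonGroup:
    name: str
    persons: dict = field(default_factory=dict)  # person name -> Person

    def add_person(self, name):
        """Return the existing person with this name, or create one."""
        if name not in self.persons:
            self.persons[name] = Person(name)
        return self.persons[name]

    def add_face(self, person_name, image_ref):
        """Attach one face image to the named person."""
        self.add_person(person_name).face_images.append(image_ref)


group = PersonGroup("co-workers")
group.add_face("Alice", "alice_1.jpg")
group.add_face("Alice", "alice_2.jpg")
group.add_face("Bob", "bob_1.jpg")
print({name: len(p.face_images) for name, p in group.persons.items()})
```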

This threshold is on a scale from 0 (most permissive) to 1 (most restrictive). At 1, faces must be perfect matches; by perfect, I mean that even two identical images at different compression rates will not be recognized as a match. In contrast, at 0 a match is always returned for the person object with the highest confidence score. In my experiments, a threshold between 0.3 and 0.35 tended to strike a good balance. To reiterate an earlier point, more images per person object increase identification accuracy, reducing both false positives and false negatives.
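Here is a rough sketch of how such a threshold could be applied on the client side to a list of identification candidates. The candidate shape (`personId` plus `confidence`) is an assumption about the response format, `pick_match` is a hypothetical helper, and the 0.35 default simply reflects the range that worked in my experiments.

```python
def pick_match(candidates, threshold=0.35):
    """Return the best candidate at or above the threshold, or None.

    With threshold=0 the highest-scoring candidate always wins;
    with threshold=1 only a perfect score is accepted.
    """
    if not candidates:
        return None
    best = max(candidates, key=lambda candidate: candidate["confidence"])
    return best if best["confidence"] >= threshold else None


# Made-up confidence values for illustration only.
candidates = [
    {"personId": "alice", "confidence": 0.62},
    {"personId": "bob", "confidence": 0.31},
]
print(pick_match(candidates))
print(pick_match([{"personId": "bob", "confidence": 0.2}]))
```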

Emotion Recognition

The Emotion API beta takes an image as input and returns the confidence across a set of emotions for each face in the image, as well as a bounding box for the face from the Face API. The emotions detected are happiness, sadness, surprise, anger, fear, contempt, disgust, and neutral. These emotions are communicated cross-culturally and universally via the same basic facial expressions, which are identified by the Emotion API.

Interpreting Results:

When interpreting results from the Emotion API, take the emotion with the highest score as the detected emotion, since scores are normalized to sum to one. Users may choose to set a higher confidence threshold within their application, depending on their needs.
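The interpretation rule above fits in a few lines of Python. The sketch assumes the scores for one face arrive as a dictionary mapping emotion names to values that sum to one; `top_emotion` and its `min_confidence` parameter are hypothetical names, and the sample scores are made up.

```python
def top_emotion(scores, min_confidence=0.0):
    """Return the highest-scoring emotion, or None if it is below the threshold."""
    emotion, score = max(scores.items(), key=lambda item: item[1])
    return emotion if score >= min_confidence else None


scores = {"happiness": 0.70, "neutral": 0.20, "surprise": 0.10}
print(top_emotion(scores))                      # plain highest score
print(top_emotion(scores, min_confidence=0.8))  # stricter application threshold
```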

For more information about emotion detection, see the API Reference:

- Basic: If a user has already called the Face API, they can submit the face rectangle as an input and use the basic tier.

- Standard: If a user does not submit a face rectangle, they should use standard mode.

How Azure Cognitive Services Identify People

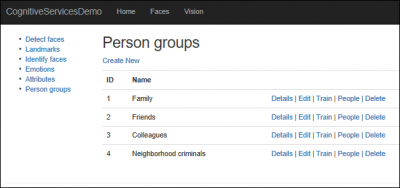

Face API has its own mechanism for identifying people in photos. In short, it needs a set of analyzed photos of people before it can identify them. Before identifying people, we need to introduce their faces to Cognitive Services. With our brand new Cognitive Services account, we start by creating a person group. We may have groups like Family, Friends, Colleagues, etc. After creating a group, we add people to the group and introduce up to 10 photos per person to Cognitive Services. After that, the group must be “trained.” Then we’ll be ready to identify people in photos.
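The sequence just described (create a group, add people, add photos, train) can be sketched as a series of REST calls. To keep the example runnable without an account, the actual HTTP call is injected as a `send(method, path, body)` function, which is my own assumption, as are the helper name and the sample URL. The paths follow the Face API REST reference as I understand it, and the limit of 10 photos is the one quoted above.

```python
MAX_PHOTOS_PER_PERSON = 10  # limit quoted in the text above


def set_up_group(send, group_id, group_name, people):
    """Create a person group, add people and photos, then start training.

    `send(method, path, body)` is a caller-supplied function that performs
    the HTTP request and returns the decoded JSON response (or None).
    `people` maps a person's name to a list of photo URLs.
    """
    for name, photo_urls in people.items():
        if len(photo_urls) > MAX_PHOTOS_PER_PERSON:
            raise ValueError(f"{name}: more than {MAX_PHOTOS_PER_PERSON} photos")

    base = f"/face/v1.0/persongroups/{group_id}"
    send("PUT", base, {"name": group_name})
    person_ids = {}
    for name, photo_urls in people.items():
        person_id = send("POST", f"{base}/persons", {"name": name})["personId"]
        person_ids[name] = person_id
        for url in photo_urls:
            send("POST", f"{base}/persons/{person_id}/persistedFaces", {"url": url})
    send("POST", f"{base}/train", None)
    return person_ids


def fake_send(method, path, body):
    """Stand-in transport that only prints the request (for illustration)."""
    print(method, path)
    return {"personId": "person-1"} if path.endswith("/persons") else None


set_up_group(fake_send, "family", "Family",
             {"Alice": ["https://example.com/alice.jpg"]})
```

In a real application `send` would wrap an HTTP client and add the subscription key header.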

Training means that the cloud service analyzes the face characteristics detected earlier and does some behind-the-scenes processing to better identify these people in photos. After adding or removing photos, a person group must be trained again.

Here is an example of the points that define a face for Face API. It’s possible to draw polylines through sets of points to outline parts of the face such as the lips, nose, and eyes.
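To show what drawing a polyline through landmark points can look like, here is a small sketch that turns landmark coordinates into an ordered list of points per face part. The landmark names follow the `faceLandmarks` field of the detection response as I understand it; which points are joined for each part, and the `part_polyline` helper itself, are illustrative assumptions. The coordinates in the example are made up.

```python
# Which landmarks are joined for each face part is an illustrative choice.
FACE_PARTS = {
    "lips": ["mouthLeft", "upperLipTop", "mouthRight", "underLipBottom"],
    "left_eye": ["eyeLeftOuter", "eyeLeftTop", "eyeLeftInner", "eyeLeftBottom"],
    "right_eye": ["eyeRightInner", "eyeRightTop", "eyeRightOuter", "eyeRightBottom"],
    "nose": ["noseRootLeft", "noseLeftAlarOutTip", "noseTip",
             "noseRightAlarOutTip", "noseRootRight"],
}


def part_polyline(landmarks, part, closed=True):
    """Return the (x, y) points outlining one face part, in drawing order.

    `landmarks` maps a landmark name to a dict with "x" and "y" keys.
    With closed=True the first point is repeated at the end.
    """
    points = [(landmarks[name]["x"], landmarks[name]["y"])
              for name in FACE_PARTS[part]]
    if closed and points:
        points.append(points[0])
    return points


landmarks = {
    "mouthLeft": {"x": 110, "y": 205},
    "upperLipTop": {"x": 130, "y": 198},
    "mouthRight": {"x": 150, "y": 205},
    "underLipBottom": {"x": 130, "y": 216},
}
print(part_polyline(landmarks, "lips"))
```

The resulting point list can be handed to whatever drawing library the application uses.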

Getting Started With Face API

Before using Cognitive Services, we need access to Microsoft Azure and a Cognitive Services Face API account. Face API has a free subscription that offers more API calls than are really needed to get started and build a first application that uses face detection.

Code alert! I am working on a sample application called CognitiveServicesDemo for my upcoming speaking engagements. Although it is still pretty raw, it can be used to explore and use the Face API of Cognitive Services.

After creating a Face API account, API keys become available. These keys, together with the Face API service endpoint URL, are needed to communicate with Face API from a sample application.
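One simple way to hand the key and endpoint to an application is through environment variables. The sketch below assumes two variable names of my own choosing, `FACE_API_ENDPOINT` and `FACE_API_KEY`; the `Ocp-Apim-Subscription-Key` header is the one the Face API REST reference uses for the key, as far as I know.

```python
import os


def load_face_api_config(env=None):
    """Build the base URL and auth header from environment-style settings.

    The variable names FACE_API_ENDPOINT and FACE_API_KEY are hypothetical;
    use whatever naming your deployment prefers.
    """
    env = os.environ if env is None else env
    endpoint = env["FACE_API_ENDPOINT"].rstrip("/")
    return {
        "base_url": endpoint + "/face/v1.0",
        "headers": {"Ocp-Apim-Subscription-Key": env["FACE_API_KEY"]},
    }


# Passing a plain dict keeps the example independent of the real environment.
config = load_face_api_config({
    "FACE_API_ENDPOINT": "https://example.cognitiveservices.azure.com/",
    "FACE_API_KEY": "not-a-real-key",
})
print(config["base_url"])
```

Keeping the key out of source code also makes it easier to rotate.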

Person Groups and People

Here are some screenshots from my sample application. The first one shows a list of person groups and the other one shows the people in the Family group. The Family group has some photos and is already trained.

Training is easy to do in code. Here is the controller action that takes the person group ID and lets FaceServiceClient train the given person group. Most of the calls to Face API are simple ones and don’t get very ugly. Of course, there are a few exceptions, as always.
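The controller action from the sample application is not reproduced here. As a stand-in, this is a rough Python sketch of the same idea: start training for a person group and poll until it finishes. The HTTP call is injected as a hypothetical `send(method, path, body)` function so the logic can run without an account; the `/train` and `/training` paths and the `succeeded` and `failed` status values follow the REST reference as I understand it.

```python
import time


def train_and_wait(send, group_id, poll_seconds=1.0, max_polls=30,
                   sleep=time.sleep):
    """Start training a person group and wait for the outcome.

    Returns True when training succeeded and False when it failed.
    Raises TimeoutError if no final status arrives within max_polls checks.
    """
    base = f"/face/v1.0/persongroups/{group_id}"
    send("POST", f"{base}/train", None)
    for _ in range(max_polls):
        status = send("GET", f"{base}/training", None)["status"]
        if status == "succeeded":
            return True
        if status == "failed":
            return False
        sleep(poll_seconds)  # still queued or running, so check again later
    raise TimeoutError(f"training of {group_id!r} did not finish in time")
```

Because adding or removing photos requires retraining, a helper like this would typically be called after every change to the group.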

Identifying People

To identify people, I use one photo that was taken in Chisinau, Moldova, when my daughter was very small. To identify who is in the photo, three service calls are needed:

- Detect faces from the photo and save face rectangles.

- Identify faces from given person group based on detected face rectangles.

- Find names of people from person group.

Identifying is a little bit tricky, as we don’t always get exact matches for people but sometimes so-called candidates instead. This means that the identification algorithm cannot decide with certainty which of two or three possible candidates is shown in the face rectangle.
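The three calls and the handling of candidates can be tied together as in the sketch below. The three service calls are passed in as functions (`detect`, `identify`, `get_person_name`), which are hypothetical wrappers you would write around the API; the dictionary keys (`faceId`, `faceRectangle`, `candidates`, `personId`, `confidence`) reflect my understanding of the response format.

```python
def identify_people(detect, identify, get_person_name, image_bytes, group_id):
    """Run detect, identify, and name lookup, keeping every candidate.

    Returns one entry per identified face with its rectangle and a list of
    (name, confidence) candidates; the list is empty when nobody matched.
    """
    faces = detect(image_bytes)                      # call 1: detect faces
    face_ids = [face["faceId"] for face in faces]
    if not face_ids:
        return []
    rectangles = {face["faceId"]: face["faceRectangle"] for face in faces}

    people = []
    for result in identify(face_ids, group_id):      # call 2: identify faces
        candidates = [
            (get_person_name(group_id, c["personId"]), c["confidence"])  # call 3
            for c in result["candidates"]
        ]
        people.append({
            "rectangle": rectangles[result["faceId"]],
            "candidates": candidates,
        })
    return people
```

Keeping all candidates, rather than only the first one, lets the application decide how to present uncertain matches.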

Here is the controller action that does the identification. If it is a GET request, the form with an image upload box and person group selection is shown. In the case of POST, it is expected that there is an image to analyze. I did some base controller magic to have a copy of the uploaded image available for requests automagically. RunOperationOnImage is the base controller method that creates a new image stream and operates on it, because Face API methods dispose of given image streams automatically.

Here is the result of identifying people with my sample application. Me, my girlfriend, and our minion were all identified successfully.

My sample application also shows how to draw rectangles of different colors around detected faces. So, take a look at it.
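The controller code itself is not reproduced here, but the idea behind RunOperationOnImage is easy to sketch in Python: keep the uploaded image as bytes and give every operation its own fresh stream, so an operation that closes its stream cannot spoil the next call. The function below is my own approximation of that idea, not the sample application's code.

```python
import io


def run_operation_on_image(image_bytes, operation):
    """Run `operation` on a fresh stream over the same image bytes.

    The stream is created per call, so it does not matter whether the
    operation closes (disposes of) it.
    """
    with io.BytesIO(image_bytes) as stream:
        return operation(stream)


def count_bytes_and_close(stream):
    """Stand-in for an API call that consumes and closes its stream."""
    data = stream.read()
    stream.close()
    return len(data)


image = b"placeholder image bytes"
print(run_operation_on_image(image, count_bytes_and_close))
print(run_operation_on_image(image, count_bytes_and_close))  # works again
```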


Author: Sai Ram Pandiri – Web Developer

Source: https://docs.microsoft.com/en-in/azure/cognitive-services/face